Introduction

At Lynxmind, we’ve always believed that real innovation happens when technology is in your own hands. This time, we decided to take that quite literally.

We built our own on-premise AI automation server, from assembling the hardware to deploying a complete AI and workflow orchestration stack. It powers our internal automation at zero subscription cost, with full control over our private data.

Assembling LYNXMIND

Putting the machine together brought me back to my teenage years, when I’d spend hours assembling gaming PCs and optimizing every setting for performance.

But this time, the goal was far more ambitious: to design a production-grade AI automation server that would give Lynxmind complete independence and control.

Hardware specs:

- Motherboard: Asus TUF Gaming A620M-Plus (Micro-ATX)

- Processor: AMD Ryzen 7 8700G “Zen 4” (8 cores, 4.2–5.1 GHz, 24 MB cache)

- Memory: 128 GB Corsair Vengeance RGB DDR5 6000 MHz (CL30)

- Storage: 4 TB WD Green SN350 (2× 2 TB NVMe PCIe 3.0 ×4)

And yes, it glows beautifully when it runs.

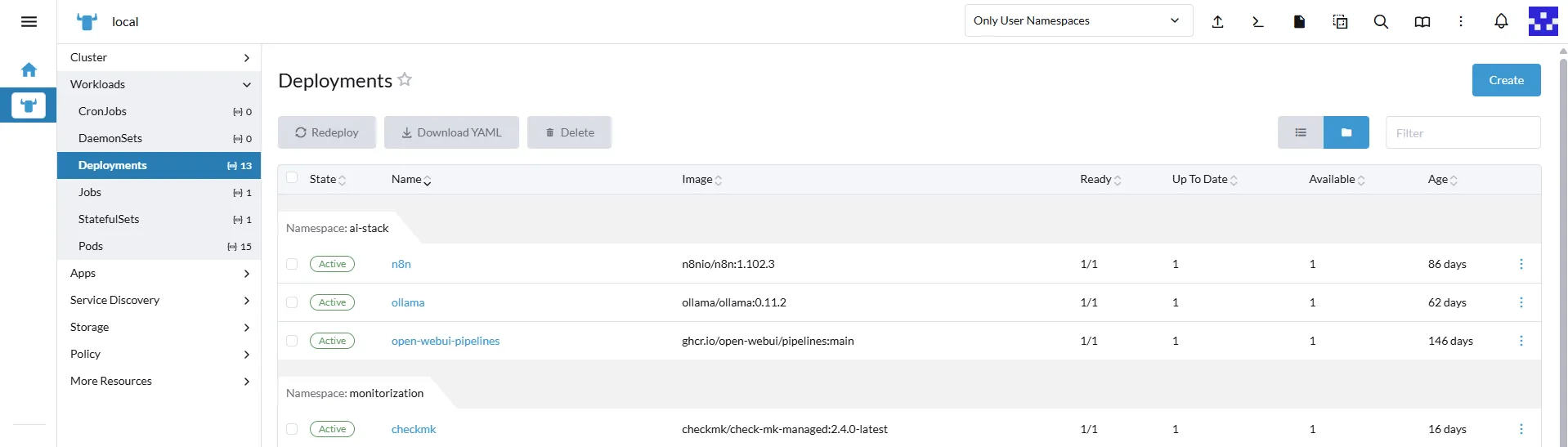

The Foundation: Ubuntu + Kubernetes + Rancher

On top of this hardware, we installed Ubuntu 24.04 LTS (kernel 6.14.0-29-generic) and set up a lightweight Kubernetes cluster using K3s, managed via Rancher.

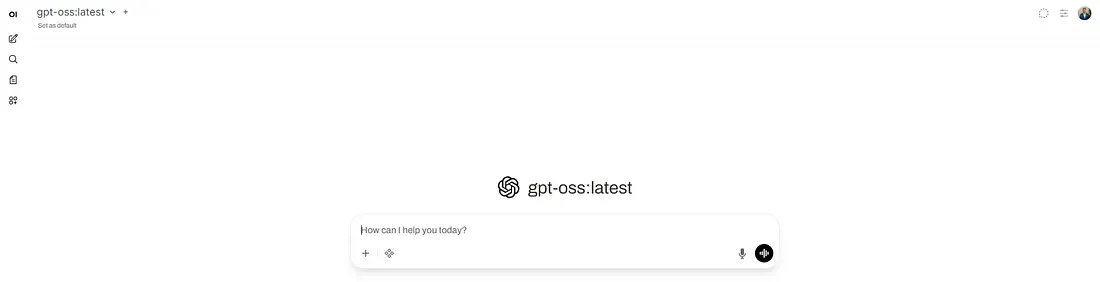

Our Private LLM Stack: Ollama + Open WebUI

Instead of relying on external APIs, we installed Ollama, a self-hosted model runner that lets us deploy and run LLMs locally. For this experiment, we’re using gpt-oss:20b, an open-weight model with strong reasoning and summarization capabilities, well suited to tasks where privacy and cost control matter.

We added Open WebUI on top to provide a simple chat-style interface to the models. Together, this pair gives us an internal, token-free and fully private AI environment.
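To give a feel for what talking to the local model involves, here is a minimal Python sketch of a call to Ollama's HTTP API. It assumes Ollama is reachable on its default port on localhost; inside a Kubernetes cluster the URL would more likely be a service name, and the helper names here are purely illustrative.

```python
import json
import urllib.request

# Assumption: Ollama's default local address. Adjust for your own cluster.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt: str, model: str = "gpt-oss:20b") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to Ollama and return the generated text."""
    body = json.dumps(build_generate_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False, Ollama replies with one JSON object whose
        # "response" field holds the full generated text.
        return json.loads(response.read())["response"]
```

With the server running, `generate("Summarize this paragraph: ...")` returns the model's answer as a plain string, and nothing leaves the machine.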

Automation Layer: Self-Hosted n8n

Next, we deployed n8n, an open-source automation platform, inside the same Kubernetes environment.

It acts as the connective tissue between our services and our local AI models, capturing events, transforming data and executing logic through a simple drag-and-drop interface.

The biggest wins:

- 💰 Zero recurring costs: no per-token fees, no subscription tiers.

- 🔒 Private data: every prompt, file and result stays inside our infrastructure.

Automating How We Process Talent

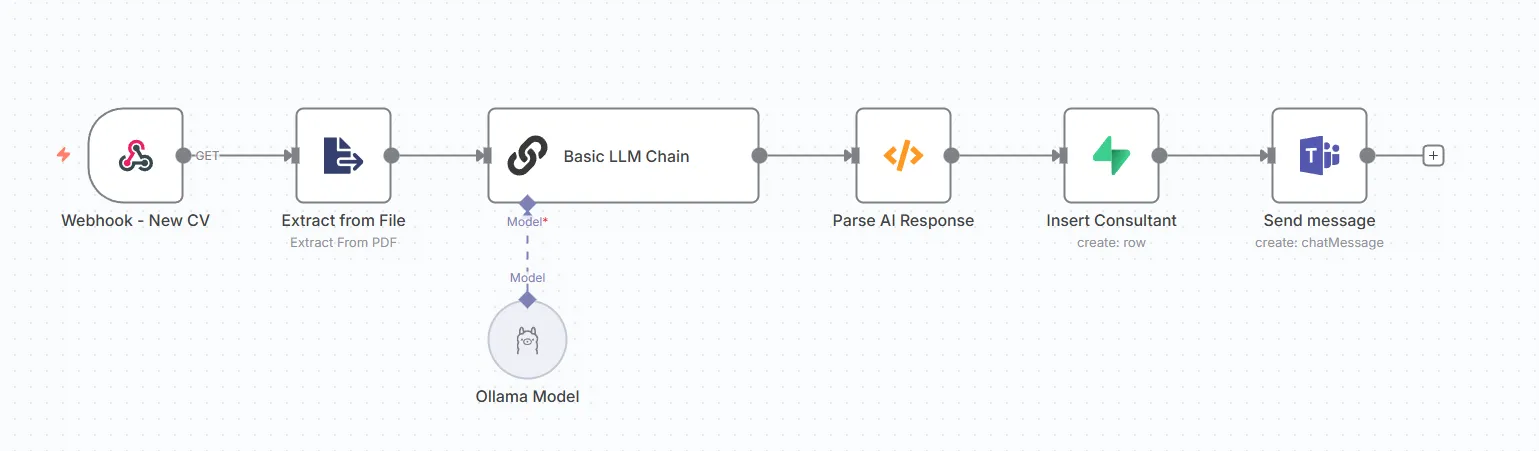

One of the first challenges we decided to tackle with Lynxserver was something very real for any growing company: the endless flow of candidate CVs.

Until then, reviewing CVs was a manual, time-consuming process. Each new application meant opening files, scanning for keywords and trying to remember who had experience with what. It was slow, inconsistent and definitely not the best use of our time.

So, we built a system to fix that.

Now, when a new CV arrives, whether by email or upload, n8n springs into action. It automatically extracts the attachment, converts it from PDF to text and sends it to our local LLM running in Ollama. The AI then reads the document and produces a structured summary: key skills, years of experience, seniority level and even a short description of the candidate’s profile.
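The summarization step can be sketched in a few lines of Python. This is an illustration rather than the actual n8n workflow: it assumes the PDF has already been converted to text, and the prompt wording, field names and `CandidateSummary` class are hypothetical choices made for this example.

```python
import json
from dataclasses import dataclass


@dataclass
class CandidateSummary:
    skills: list[str]
    years_experience: float
    seniority: str
    profile: str


# Hypothetical prompt: asks the model for one JSON object with fixed keys.
PROMPT_TEMPLATE = (
    "Read the CV below and reply with a single JSON object with the keys "
    '"skills" (list of strings), "years_experience" (number), '
    '"seniority" (string) and "profile" (one short paragraph).\n\nCV:\n{cv_text}'
)


def build_prompt(cv_text: str) -> str:
    """Wrap the extracted CV text in the summarization prompt."""
    return PROMPT_TEMPLATE.format(cv_text=cv_text.strip())


def parse_summary(raw_reply: str) -> CandidateSummary:
    """Parse the text between the first '{' and the last '}' as JSON."""
    start, end = raw_reply.find("{"), raw_reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(raw_reply[start : end + 1])
    return CandidateSummary(
        skills=[str(skill).strip() for skill in data["skills"]],
        years_experience=float(data["years_experience"]),
        seniority=str(data["seniority"]),
        profile=str(data["profile"]),
    )
```

Parsing into a typed object makes failures visible early: if the model omits a key or returns malformed JSON, the step raises an error instead of silently storing an incomplete record.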

That structured data is then inserted directly into Supabase, our internal PostgreSQL-based database, which is also self-hosted on Kubernetes. From there, we can instantly search and filter candidates by capability, all without anyone manually reading a single CV.
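One way to store and query such records is a candidates table plus a skills table, shown in the Python sketch below. To keep it runnable anywhere, an in-memory SQLite database stands in for the PostgreSQL database behind Supabase; the table names, column names and helper functions are assumptions made for illustration, not the actual schema.

```python
import sqlite3

# Hypothetical schema: one row per candidate, one row per (candidate, skill).
SCHEMA = """
CREATE TABLE candidates (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    seniority TEXT NOT NULL,
    years_experience REAL NOT NULL,
    profile TEXT NOT NULL
);
CREATE TABLE candidate_skills (
    candidate_id INTEGER NOT NULL REFERENCES candidates(id),
    skill TEXT NOT NULL,
    PRIMARY KEY (candidate_id, skill)
);
"""


def open_database() -> sqlite3.Connection:
    """Create an in-memory database (a stand-in for PostgreSQL)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def insert_candidate(conn, name, seniority, years_experience, profile, skills) -> int:
    """Store one structured summary and return the new candidate id."""
    cursor = conn.execute(
        "INSERT INTO candidates (name, seniority, years_experience, profile) "
        "VALUES (?, ?, ?, ?)",
        (name, seniority, years_experience, profile),
    )
    candidate_id = cursor.lastrowid
    # Normalize and de-duplicate skills so "Python" and "python" match.
    unique_skills = sorted({skill.strip().lower() for skill in skills})
    conn.executemany(
        "INSERT INTO candidate_skills (candidate_id, skill) VALUES (?, ?)",
        [(candidate_id, skill) for skill in unique_skills],
    )
    conn.commit()
    return candidate_id


def find_by_skill(conn, skill: str, min_years: float = 0.0) -> list[str]:
    """Return candidate names with the skill, most experienced first."""
    rows = conn.execute(
        """
        SELECT c.name
        FROM candidates AS c
        JOIN candidate_skills AS s ON s.candidate_id = c.id
        WHERE s.skill = ? AND c.years_experience >= ?
        ORDER BY c.years_experience DESC, c.name
        """,
        (skill.strip().lower(), min_years),
    ).fetchall()
    return [row[0] for row in rows]
```

Keeping skills in their own table is what makes "filter by capability" a plain indexed lookup rather than a text search over free-form summaries.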

Finally, our Human Resources team receives a notification that the CV has been processed.

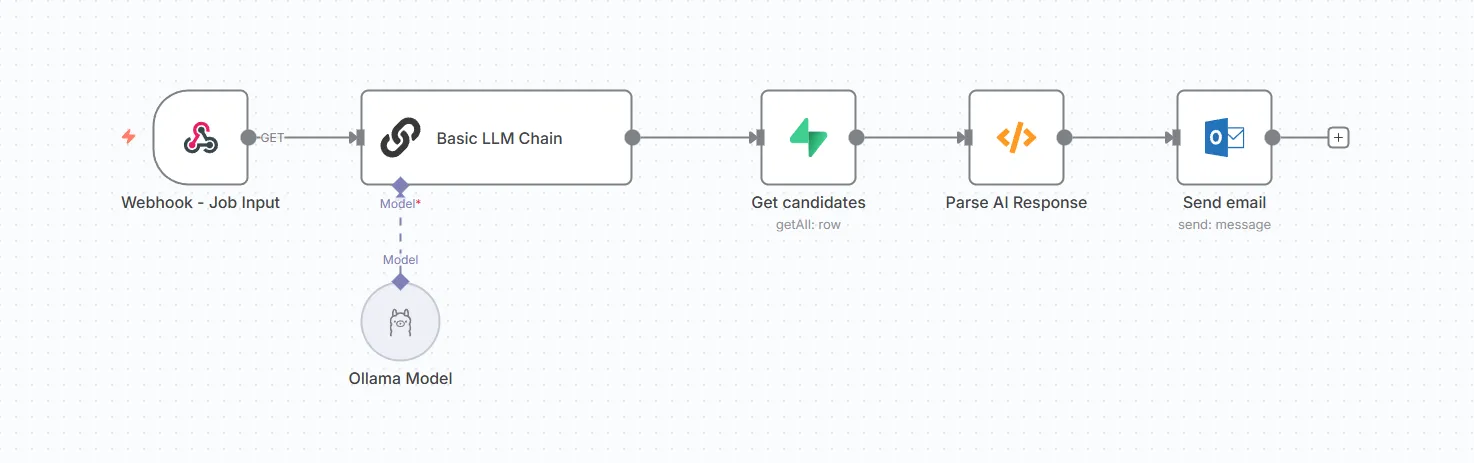

Finding the Perfect Match Instantly

We didn’t stop there. Once the candidates were structured in the database, we flipped the workflow.

When a new job opening is created, n8n sends the description to Ollama, which identifies the core required skills and experience.

Then, using Supabase queries, the system automatically compares those requirements against the stored candidate profiles and returns a ranked list of the best matches, complete with a match score and summary.
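A simple version of the scoring idea looks like the Python sketch below. It runs in memory rather than as Supabase queries, and the formula (80% skill coverage, 20% experience) is an assumption chosen for illustration, not the scoring used in the real workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    skills: frozenset[str]
    years_experience: float


def match_score(candidate: Candidate, required_skills, min_years: float) -> float:
    """Return a score in [0, 1]: 80% skill coverage, 20% experience."""
    required = {skill.lower() for skill in required_skills}
    have = {skill.lower() for skill in candidate.skills}
    # Fraction of the required skills that the candidate covers.
    coverage = len(required & have) / len(required) if required else 1.0
    # Experience counts fully once the minimum is met, proportionally below it.
    if min_years <= 0:
        experience = 1.0
    else:
        experience = min(candidate.years_experience / min_years, 1.0)
    return round(0.8 * coverage + 0.2 * experience, 3)


def rank_candidates(candidates, required_skills, min_years: float, top_n: int = 5):
    """Return up to top_n (score, name) pairs, best match first."""
    scored = [(match_score(c, required_skills, min_years), c.name) for c in candidates]
    # Sort by descending score, then by name so ties are stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return scored[:top_n]
```

An explicit, deterministic score like this is easy to explain to a recruiter, while the LLM handles the fuzzy part of turning a job description into a list of required skills.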

What used to take hours of reading now happens in seconds, privately, inside our own infrastructure. It’s like having an intelligent recruiting assistant, one that never sleeps and never leaks data.

Closing thoughts

This project reminded me why I fell in love with technology in the first place.

From assembling the hardware to watching Kubernetes nodes light up, it felt like building my first gaming rig, except this time, it powers something far greater.

At Lynxmind, we’re proving that AI doesn’t have to live in someone else’s cloud.

Stack Summary

- Hardware: Custom-built PC (Ryzen 7 8700G, 128 GB RAM, 4 TB NVMe). High-performance, energy-efficient local compute.

- Operating system: Ubuntu 24.04 LTS. Stable, developer-friendly Linux distribution.

- Orchestration: K3s + Rancher. Lightweight Kubernetes and UI management.

- AI runtime: Ollama + Open WebUI. Local LLM hosting and interaction.

- Automation platform: n8n. Visual workflow automation.

- Database: Supabase (PostgreSQL). Candidate and job data storage.